Comprehensive Protection

Detect and block toxic content, sensitive data, jailbreak attempts, and malicious requests

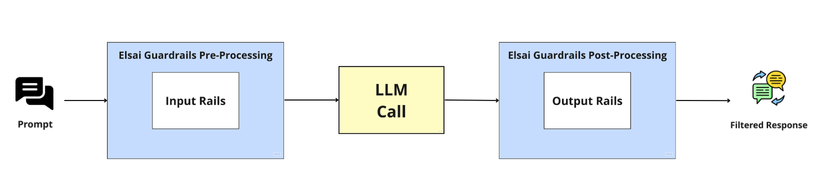

Elsai Guardrails secures LLM-based applications. It combines built-in protection against common threats with flexible configuration options, helping you keep your AI applications safe and compliant.
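To make the idea of an input guardrail concrete, here is a minimal, library-independent sketch of checking a prompt before it reaches a model. It is not Elsai Guardrails' implementation: the names `check_input`, `guarded_call`, and `CheckResult`, the email pattern, and the phrase list are all hypothetical and chosen only for illustration. Real detectors are considerably more thorough.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple detectors (assumptions for illustration only).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
JAILBREAK_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
)


@dataclass
class CheckResult:
    allowed: bool
    reasons: list = field(default_factory=list)


def check_input(text: str) -> CheckResult:
    """Flag text that looks like it contains an email address or a jailbreak phrase."""
    reasons = []
    if EMAIL_RE.search(text):
        reasons.append("sensitive_data")
    lowered = text.lower()
    if any(phrase in lowered for phrase in JAILBREAK_PHRASES):
        reasons.append("jailbreak")
    return CheckResult(allowed=not reasons, reasons=reasons)


def guarded_call(text: str, llm) -> str:
    """Call `llm` (any callable taking a string) only if the input passes the check."""
    result = check_input(text)
    if not result.allowed:
        return "Request blocked: " + ", ".join(result.reasons)
    return llm(text)
```

In this sketch the check runs before the model is ever called, so a blocked request never reaches the LLM. A production guardrail would typically also inspect the model's output.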

```python
from elsai_guardrails.guardrails import LLMRails

# Initialize with configuration
rails = LLMRails.from_config("config.yml")

# Safe LLM calls with automatic guardrails
response = rails.generate(
    messages=[{"role": "user", "content": "Hello!"}]
)
```

Version 0.1.1 introduces powerful new features:

See What's New | Release Notes

Ready to secure your LLM application? Check out our Installation Guide to get started in minutes!